Web browsers can now run large language models without server support, thanks to npm AI packages. The tooling available to developers building AI-powered applications has improved rapidly. Web LLM and Wllama let us create interactive web apps with built-in chat features that run completely on the client side. Wllama had just 91 GitHub stars as of May 10th, 2024.

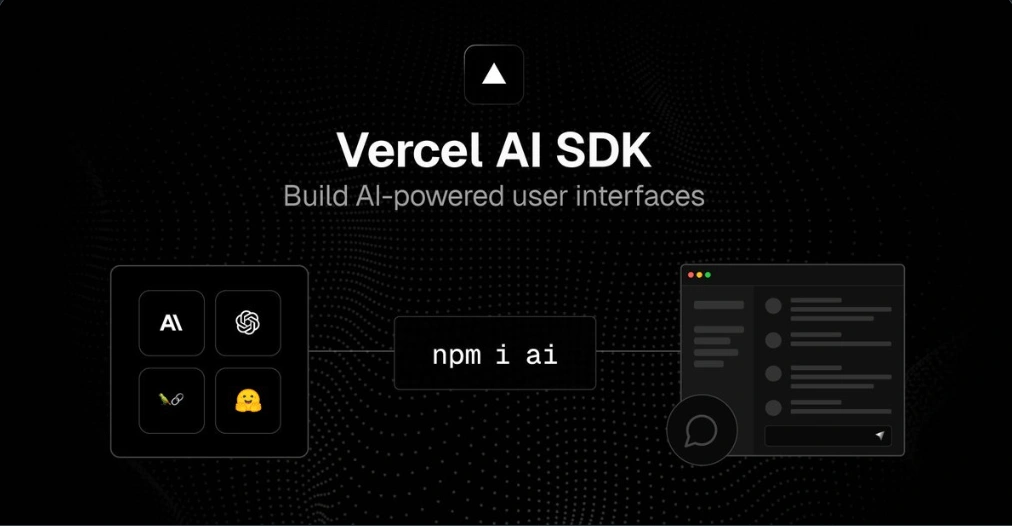

The npm AI package ecosystem has grown substantially. EnergeticAI offers optimized TensorFlow.js versions that work in serverless environments. The Vercel AI SDK, an open-source npm AI SDK, lets developers build conversational interfaces using JavaScript and TypeScript. It supports popular frameworks like React/Next.js and Svelte/SvelteKit. This SDK has built-in adapters for OpenAI, LangChain, and Hugging Face Inference that help create advanced streaming experiences. Security remains a concern though – researchers found malicious npm packages targeting Cursor on macOS that users downloaded more than 3,200 times.

This piece covers the npm AI packages that can change your applications, from setting up your development environment to deploying flexible AI solutions.

Setting Up Your Smart App Environment with npm AI SDKs

Image Source: LinkedIn

Setting up a development environment for AI-powered applications starts with choosing npm packages that fit your project’s needs. Let’s look at what you need to create smart apps with JavaScript.

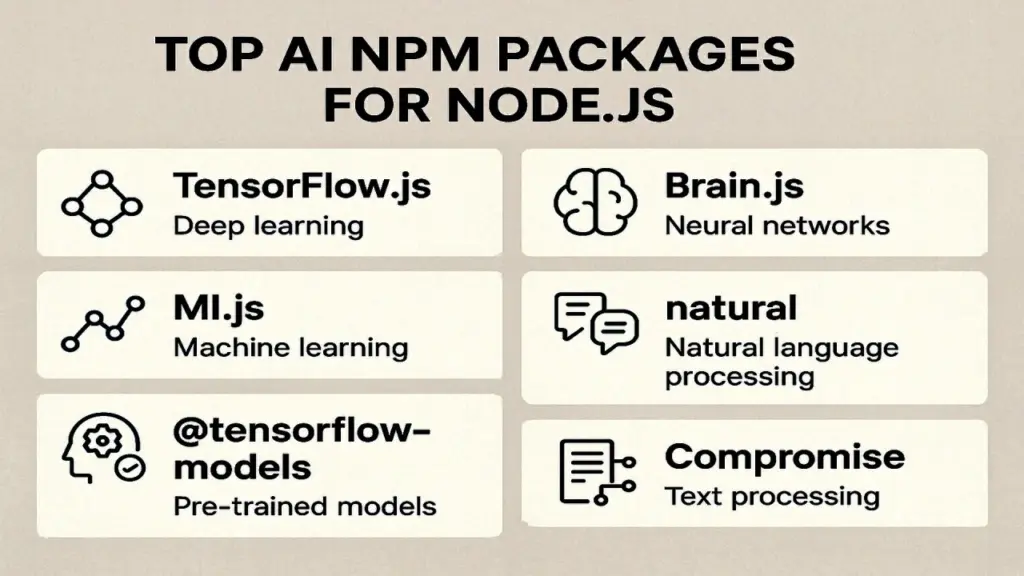

Installing npm ai packages: tensorflow, brain.js, natural

The right tools are crucial when you start AI development in JavaScript. TensorFlow.js gives you powerful capabilities through npm:

npm install @tensorflow/tfjs

You can install the specialized package if you need GPU acceleration in Node.js environments:

npm install @tensorflow/tfjs-node-gpu

Brain.js gives you a simpler approach for neural networks with a user-friendly API:

npm install brain.js

Brain.js ranks as the second most used JavaScript AI library after TensorFlow. This makes it a great choice for beginners. The Natural package helps with natural language processing tasks:

npm install natural

Choosing Between Browser and Node.js Execution

Your AI application’s performance depends on the execution environment. Browser-based applications run directly in the client’s browser without server support. However, browsers can’t access the filesystem functionality that Node.js provides.
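Code that must run in both places usually starts by checking where it is. The following is a minimal sketch of that check; `detectRuntime` and `modelSource` are hypothetical helpers written for this illustration, not part of any package mentioned here.

```javascript
// Minimal runtime check. `detectRuntime` is a hypothetical helper, not a library API.
function detectRuntime(scope = globalThis) {
  // Node.js exposes process.versions.node; browsers do not.
  if (scope.process && scope.process.versions && scope.process.versions.node) {
    return 'node';
  }
  // Browsers expose a window object with a document attached.
  if (scope.window && scope.window.document) {
    return 'browser';
  }
  return 'unknown';
}

// Pick a model-loading strategy based on where the code is running.
function modelSource(runtime) {
  return runtime === 'node'
    ? 'filesystem' // e.g. read model weights from disk
    : 'network';   // e.g. fetch model weights over HTTP
}

const runtime = detectRuntime();
console.log(runtime, modelSource(runtime));
```

Passing the global scope in as a parameter keeps the check easy to exercise with stand-in objects, which is the only reason it is written this way.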

Node.js for AI execution gives you several benefits:

- Environment control: You know the exact Node.js version that will run your application

- Support for modern JavaScript: Write ES2015+ code without compatibility concerns

- Module system flexibility: Use both CommonJS (require()) and ES modules (import)

OpenAI integration needs a different configuration approach in each environment. In Node.js serverless functions, you set up authentication by importing the client module and creating a configured instance.

Environment Setup for Edge and Serverless Deployment

Serverless computing reshapes AI deployment because you don’t need to manage the underlying infrastructure. For edge deployments, declare the SDK dependencies in your package.json:

{
  "dependencies": {
    "ai": "^3.1.0",
    "@ai-sdk/openai": "latest"
  }
}

API keys stay secure in a .env file through environment variables:

OPENAI_API_KEY=your_api_key_here

Combining edge computing with serverless principles improves distributed AI applications. It reduces data transfer needs and makes the overall system perform better. Deploying on the Vercel Edge Runtime gives your application low-latency AI processing close to your users.

Serverless deployment with the OpenAI npm SDK requires Node.js 18+ and authentication through environment variables. Avoid hard-coding sensitive information in your application.
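One way to enforce this is to fail fast when a secret is missing, instead of discovering it on the first API call. This is a small sketch under that assumption; `requireEnv` is a hypothetical helper, and the second argument exists only so the example can run without a real .env file.

```javascript
// Hypothetical helper: read a required secret from the environment and fail loudly if absent.
function requireEnv(name, env = process.env) {
  const value = env[name];
  if (typeof value !== 'string' || value.trim() === '') {
    throw new Error(`Missing required environment variable: ${name}`);
  }
  return value;
}

// Usage with a plain object standing in for process.env.
const apiKey = requireEnv('OPENAI_API_KEY', { OPENAI_API_KEY: 'your_api_key_here' });
console.log(`Loaded a key of length ${apiKey.length}`);
```

In a deployed function you would call `requireEnv('OPENAI_API_KEY')` with no second argument so that the value comes from the platform's environment settings.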

Building Chat Interfaces with Vercel AI SDK

Image Source: Superpower Daily

The Vercel AI SDK makes building interactive chat interfaces simple. This npm AI package helps you create conversational UIs and connect them to a variety of AI providers while it handles the complex parts of real-time communication.

Using useChat() for Real-time Conversations

The useChat() hook is the foundation of chat interface development in the Vercel AI SDK. This hook brings three main benefits: message streaming in real-time, automatic state management, and easy design integration.

const { messages, input, handleInputChange, handleSubmit } = useChat();

Developers can access complete state management for inputs, messages, error handling, and status tracking with this single hook. The hook shows different statuses like submitted, streaming, ready, and error. Each status represents a different stage of the chat process. These statuses help create responsive UIs that show when the AI processes information.
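The four statuses named above can drive the UI through a simple lookup. The sketch below is framework-free and makes no SDK calls; the labels and the `inputDisabled` flag are illustrative choices, not values the SDK provides.

```javascript
// Map each chat status to UI hints. Labels and flags are illustrative assumptions.
const STATUS_UI = {
  submitted: { label: 'Sending...', inputDisabled: true },
  streaming: { label: 'Assistant is typing...', inputDisabled: true },
  ready: { label: '', inputDisabled: false },
  error: { label: 'Something went wrong. Try again.', inputDisabled: false },
};

function uiForStatus(status) {
  // Fall back to the idle state for any status this sketch does not know about.
  return STATUS_UI[status] ?? STATUS_UI.ready;
}

console.log(uiForStatus('streaming'));
```

A component could then render `uiForStatus(status).label` next to the input and disable the submit button while a response is in flight.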

StreamingTextResponse for Token-by-Token Output

Users stay more engaged when they see AI responses as they are generated instead of waiting for full messages. Token streaming works with chat models that support it, such as GPT-4o-mini. The call below follows the LangGraph-style agent.stream() pattern and assumes agent is an already configured agent:

const stream = await agent.stream(
  { messages: [{ role: "user", content: "What's the weather?" }] },
  { streamMode: "messages" }
);

You can use this streaming feature with many providers and customize it based on what your application needs. In this pattern, you can turn off streaming for specific nodes by adding a “nostream” tag.
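Whatever produces the stream, the consuming side looks much the same: read tokens as they arrive and update the display with the text so far. The sketch below simulates that loop with a stand-in async generator; `fakeTokenStream` and `renderStream` are hypothetical names, and no model or SDK is involved.

```javascript
// Stand-in for a model stream: yields one token at a time after a small delay.
async function* fakeTokenStream(tokens, delayMs = 10) {
  for (const token of tokens) {
    await new Promise((resolve) => setTimeout(resolve, delayMs));
    yield token;
  }
}

// Consume any async iterable of tokens, reporting the partial text after each one.
async function renderStream(stream, onUpdate) {
  let text = '';
  for await (const token of stream) {
    text += token;
    onUpdate(text); // e.g. re-render the chat bubble with the text so far
  }
  return text;
}

renderStream(fakeTokenStream(['It ', 'is ', 'sunny.']), (partial) => console.log(partial));
```

Because `renderStream` accepts any async iterable, the same loop would work with a real provider stream that yields text chunks.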

Callbacks for Prompt Logging and Completion Storage

The SDK comes with key event callbacks that handle different parts of the chat lifecycle:

- onFinish: Runs when the assistant message ends

- onError: Runs when a request error occurs

- onResponse: Runs when the API sends a response

These callbacks let you add features like prompt logging, response storage, and analytics. You can track conversations and make the AI work better over time.
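As a rough sketch of prompt logging and completion storage, the handlers below append to an in-memory array. `createChatLogger` is a hypothetical helper, and the assumption that the finished message carries a `content` field may not match every SDK version; a real app would write to a database or analytics service instead.

```javascript
// Hypothetical in-memory logger for chat lifecycle events.
function createChatLogger(now = () => new Date().toISOString()) {
  const entries = [];
  return {
    entries,
    callbacks: {
      // Assumed shape: the finished assistant message has `role` and `content`.
      onFinish(message) {
        entries.push({ type: 'completion', at: now(), content: message.content });
      },
      onError(error) {
        entries.push({ type: 'error', at: now(), content: error.message });
      },
    },
  };
}

// Simulate the two events by calling the handlers directly.
const logger = createChatLogger();
logger.callbacks.onFinish({ role: 'assistant', content: 'Hello!' });
logger.callbacks.onError(new Error('Rate limit reached'));
console.log(logger.entries);
```

The `callbacks` object is shaped so it could be spread into the hook's options, assuming the hook accepts handlers with these names.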

The experimental_prepareRequestBody option gives you more control over server data. You can send only what you need instead of the whole message history. This helps reduce bandwidth usage.
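A request-body builder that sends only the latest message might look like the sketch below. The `{ id, messages }` argument shape and the `message` field in the result are assumptions for illustration; check the SDK version you use for the exact contract.

```javascript
// Sketch of a request-body builder that sends only the newest message.
// The `{ id, messages }` argument shape is an assumption about what the hook passes in.
function prepareRequestBody({ id, messages }) {
  const lastMessage = messages[messages.length - 1];
  return { id, message: lastMessage };
}

const body = prepareRequestBody({
  id: 'chat-1',
  messages: [
    { role: 'user', content: 'Hi' },
    { role: 'assistant', content: 'Hello! How can I help?' },
    { role: 'user', content: "What's the weather?" },
  ],
});
console.log(JSON.stringify(body));
```

With this approach the server has to load earlier messages itself, for example by looking up the conversation by `id`, so the bandwidth saving comes at the cost of server-side storage.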

Integrating NLP and Classification with Natural and Limdu.js

Image Source: GeeksforGeeks

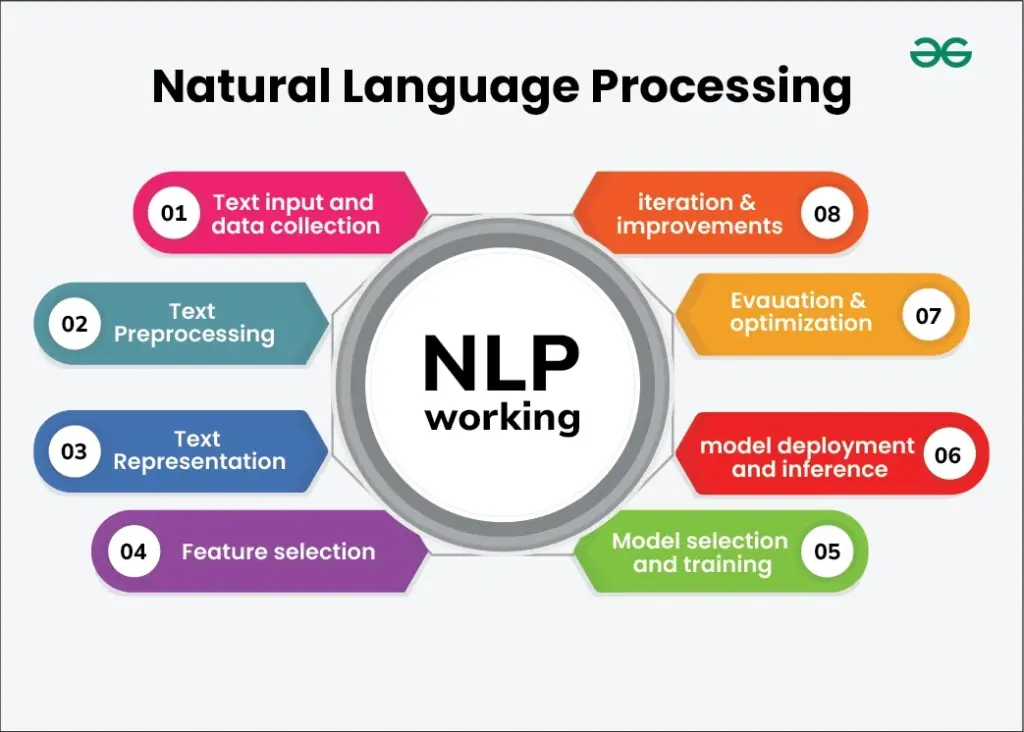

Natural language processing creates new possibilities for npm AI packages. Developers can now build applications that understand and process human language. Natural and Limdu.js emerge as two standout libraries in this space.

Tokenization and Stemming with natural

Natural is a complete npm AI package with simple NLP capabilities. You can install it through npm:

npm install natural

Text analysis depends on tokenization, which breaks text into individual words or tokens. Natural makes this process simple:

const natural = require('natural');
const tokenizer = new natural.WordTokenizer();
const tokens = tokenizer.tokenize("The quick brown fox jumps over the lazy dog");
// Output: [ 'The', 'quick', 'brown', 'fox', 'jumps', 'over', 'the', 'lazy', 'dog' ]

Stemming reduces words to their root forms after tokenization. For example, a stemmer can reduce “cats,” “catlike,” and “catty” to “cat.” Natural offers multiple stemming algorithms:

// Porter stemmer (less aggressive)
console.log(natural.PorterStemmer.tokenizeAndStem("I can see that we are going to be friends"));
// Output: [ 'go', 'friend' ]

Immediate Classification with limdu.classifiers.NeuralNetwork()

Limdu.js shines at classification tasks. This classifier processes data without retraining the entire model:

const limdu = require('limdu');
const colorClassifier = new limdu.classifiers.NeuralNetwork();

colorClassifier.trainBatch([
  {input: { r: 0.03, g: 0.7, b: 0.5 }, output: 0}, // black
  {input: { r: 0.16, g: 0.09, b: 0.2 }, output: 1}, // white
  {input: { r: 0.5, g: 0.5, b: 1.0 }, output: 1} // white
]);

console.log(colorClassifier.classify({ r: 1, g: 0.4, b: 0 })); // 0.99 - almost white

Multi-label and Online Learning Capabilities

Limdu.js stands out with its multi-label classification capabilities. The system assigns multiple categories to one input, unlike single-label classifiers:

const MyWinnow = limdu.classifiers.Winnow.bind(0, {retrain_count: 10});

const intentClassifier = new limdu.classifiers.multilabel.BinaryRelevance({
  binaryClassifierType: MyWinnow
});

intentClassifier.trainBatch([
  {input: {I:1,want:1,an:1,apple:1}, output: "APPLE"},
  {input: {I:1,want:1,a:1,banana:1}, output: "BANANA"}
]);

console.dir(intentClassifier.classify({I:1,want:1,an:1,apple:1,and:1,a:1,banana:1}));
// Output: ['APPLE','BANANA']

Limdu.js goes beyond simple classification and supports “explanations” that show how a classification was derived:

const bayesClassifier = new limdu.classifiers.Bayesian();
// After training...
// data is a feature object like the ones used for training; 1 is the explanation level
console.log(bayesClassifier.classify(data, 1)); // Returns explanation with probabilities

Natural handles text preprocessing while Limdu.js manages classification. Together they form the foundation of intelligent npm AI applications that understand language, learn continuously, and provide analytical insights.
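The hand-off between preprocessing and classification comes down to turning raw text into the `{ word: 1 }` feature objects shown in the intent example. The sketch below does that without either dependency; `tokenize` is a toy regex splitter standing in for Natural's tokenizer, and `toFeatures` is a hypothetical helper.

```javascript
// Toy tokenizer standing in for a real one: split on runs of non-word characters.
function tokenize(text) {
  return text.split(/\W+/).filter((token) => token.length > 0);
}

// Convert text into the { word: 1 } feature object used in the classifier examples.
function toFeatures(text) {
  const features = {};
  for (const token of tokenize(text)) {
    features[token] = 1;
  }
  return features;
}

console.log(toFeatures('I want an apple and a banana'));
```

In a real pipeline you would likely swap `tokenize` for Natural's `WordTokenizer` and pass the resulting object to a trained classifier's `classify` method.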

Deploying and Scaling AI Apps with Edge Functions

Image Source: Vercel

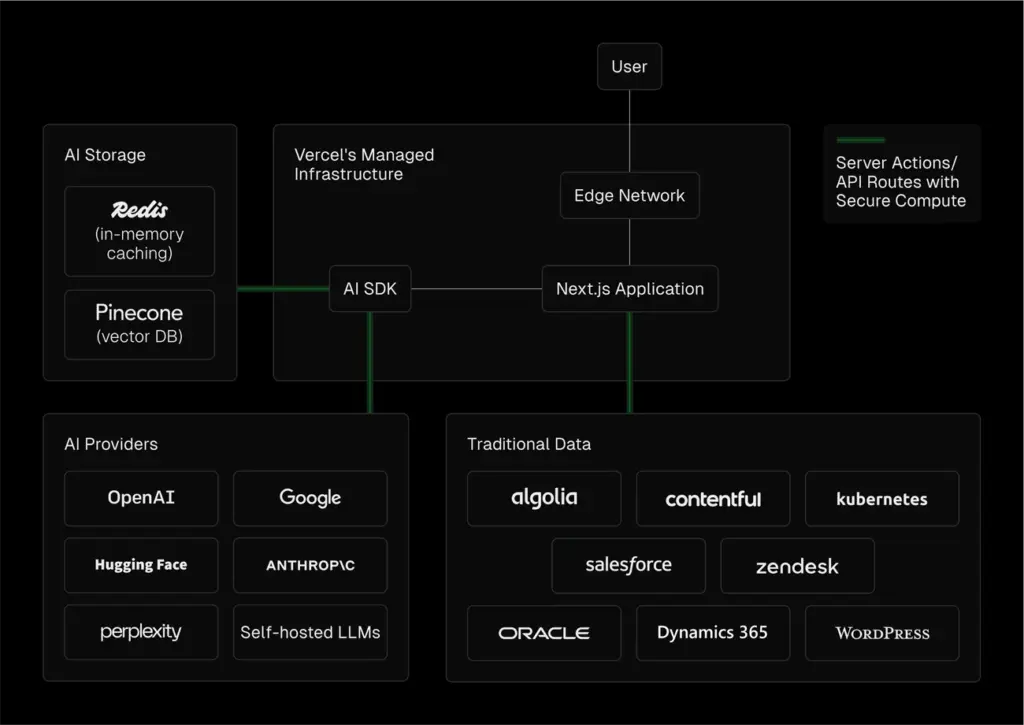

AI applications need smart infrastructure choices to deliver the best performance and user experience. Vercel’s Edge Functions represent a fundamental change in how npm AI packages are deployed, bringing computation closer to users.

Using Vercel Edge Runtime for Low-latency AI

Vercel’s Edge Runtime powers Edge Functions with a minimal JavaScript environment that exposes Web Standard APIs. These functions run in the regions closest to user requests and deliver responses significantly faster than traditional serverless solutions. Edge Functions perform almost 40% faster than hot Serverless Functions at a fraction of the cost.

Edge Runtime’s lightweight design runs on Chrome’s V8 engine without MicroVM overhead, making it a good fit for AI applications that need quick responses. Tasks like image generation cost far less when running in Edge Functions than in traditional Serverless Functions: almost 15x less.
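Because the runtime exposes Web Standard APIs, a handler can be written against `Request`, `Response`, and `URL` alone. The following is a sketch under that assumption: the `prompt` query parameter and the echo reply are placeholders for a real model call, and in a Vercel project the function would be the file's default export. It runs as-is on Node.js 18+, where these globals are available.

```javascript
// Sketch of an edge-style handler built only on Web Standard APIs.
// The `prompt` parameter and the echo reply are placeholders for a real model call.
async function handler(request) {
  const { searchParams } = new URL(request.url);
  const prompt = searchParams.get('prompt');

  if (!prompt) {
    return new Response(JSON.stringify({ error: 'Missing prompt' }), {
      status: 400,
      headers: { 'content-type': 'application/json' },
    });
  }

  return new Response(JSON.stringify({ reply: `You asked: ${prompt}` }), {
    status: 200,
    headers: { 'content-type': 'application/json' },
  });
}

// Local smoke test with a hand-built Request object.
handler(new Request('https://example.com/api/chat?prompt=hello'))
  .then((response) => response.json())
  .then((data) => console.log(data));
```

Keeping the handler free of Node-specific modules is what lets the same code move between a local run and an edge deployment.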

Serverless Deployment with npm openai SDKs

Your project configuration should include:

{
  "dependencies": {
    "ai": "^3.1.0",
    "@ai-sdk/openai": "latest"
  }
}

Environment variables store API keys securely without hardcoding sensitive information. In Node.js environments, you import the OpenAI client and create a configured instance before calling its methods.

Vercel’s Regional Edge Functions pin your function to a specific region when you need database proximity. This minimizes latency between function and database.
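Region pinning is typically expressed as a small configuration object next to the handler. The sketch below assumes a `config` object with `runtime` and `regions` fields; in a real function file it would be exported, and `iad1` is only an example region identifier, so check the platform documentation for the exact shape your framework expects.

```javascript
// Region pinning sketch. In a real function file this object would be exported
// (for example `export const config = ...`); 'iad1' is only an example region ID.
const config = {
  runtime: 'edge',
  regions: ['iad1'], // run only in the region nearest the database
};

console.log(config);
```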

Monitoring and Scaling with Vercel Infrastructure

Vercel offers strong tools to maintain AI applications at scale:

- Edge Network: Serves billions of requests globally and delivers dynamic AI workloads at static web speeds

- DDoS Mitigation: Blocks suspicious traffic levels automatically

- Web Application Firewall: Deploys first-party security at the edge without complex integration

Vercel’s serverless deployments handle demanding AI workloads automatically without infrastructure complexity. Their in-function concurrency lets a single Vercel Function process multiple invocations simultaneously to optimize resource use. This approach, called Fluid, puts to work the compute time that traditional serverless leaves idle.
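The idea behind in-function concurrency can be illustrated locally: while one invocation waits on I/O, the same instance starts working on the next. This is a conceptual simulation only, not Vercel's implementation; `createInstance`, `invoke`, and the 20 ms wait are made up for the example.

```javascript
// Simulated I/O wait, e.g. waiting on a model provider's response.
const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

// Hypothetical function instance that tracks how many invocations overlap.
function createInstance() {
  let inFlight = 0;
  let peak = 0;
  return {
    async invoke(id, waitMs = 20) {
      inFlight += 1;
      peak = Math.max(peak, inFlight);
      await sleep(waitMs); // the instance is idle here and can accept other work
      inFlight -= 1;
      return `done:${id}`;
    },
    peak: () => peak,
  };
}

const instance = createInstance();
Promise.all([instance.invoke(1), instance.invoke(2), instance.invoke(3)]).then((results) => {
  console.log(results, 'peak concurrency:', instance.peak());
});
```

AI workloads spend much of their time waiting on a model response, which is why overlapping invocations in one instance can reduce wasted compute.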

Enterprise-grade solutions benefit from Secure Compute, which creates isolated bridges between Vercel and on-premise backends or Kubernetes services. This feature is especially valuable for custom model deployment scenarios.

Conclusion

The Future is Now: Making AI Accessible Through npm

This piece shows how npm AI packages have changed the landscape of web application development. JavaScript developers can now build sophisticated AI-powered applications without deep machine learning expertise. The move from server-dependent models to client-side execution brings a fundamental change to intelligent application development.

Npm AI tools make artificial intelligence development available to everyone. TensorFlow.js and Brain.js are the foundations of neural networks, while Natural and Limdu.js handle complex language processing tasks that once needed specialized knowledge. Developers can now focus on creating valuable user experiences instead of dealing with machine learning setup.

The Vercel AI SDK is a game-changer for conversational interfaces. Its streaming features, detailed state management, and framework adaptability make chat applications easy to build. Edge Functions boost these applications by delivering quick responses at a lower cost than traditional deployment.

Security needs careful attention when using AI packages. Recent findings of malicious npm packages targeting developers are a reminder to check package sources and stay vigilant. It’s also crucial to manage API keys through environment variables instead of hardcoding credentials in production applications.

The npm AI ecosystem will keep growing with specialized tools and better implementations. The gap between idea and AI-powered implementation gets smaller, letting developers build smarter applications. Becoming skilled at these npm AI packages today sets you up for the next wave of smart application development right around the corner.